One question I get asked over and over is:

“How does our implementation of Apache Airflow and data engineering best practices compare to others you have seen?”

My immediate response is often to compare them to other teams I have worked with in the past, but in retrospect, that does them a disservice. Two fin-tech firms may be at different stages of implementing Airflow, or one may be stuck on Apache Airflow 1.10 and weighing a move to AWS MWAA, Google Cloud Composer, or Dagster.

Teams deserve to hear about how competitors implement their solutions, but the real value is found through introspection of their own stack and technical decisions. Within an organization, each data team, whether engineers, analysts, scientists, or machine learning engineers, has a different perspective on how everything works together. Giving my opinion without their input will simply muddy the waters.

Since founding Essentl, I have adopted an old practice that shifts a team's focus away from tactical issues and toward a strategic view of getting the most from their tools. Our team members use practices like this to teach you how to best approach workflow management and how to integrate Apache Airflow with other toolsets. We pair with you and your team to apply best practices and decrease your time to value.

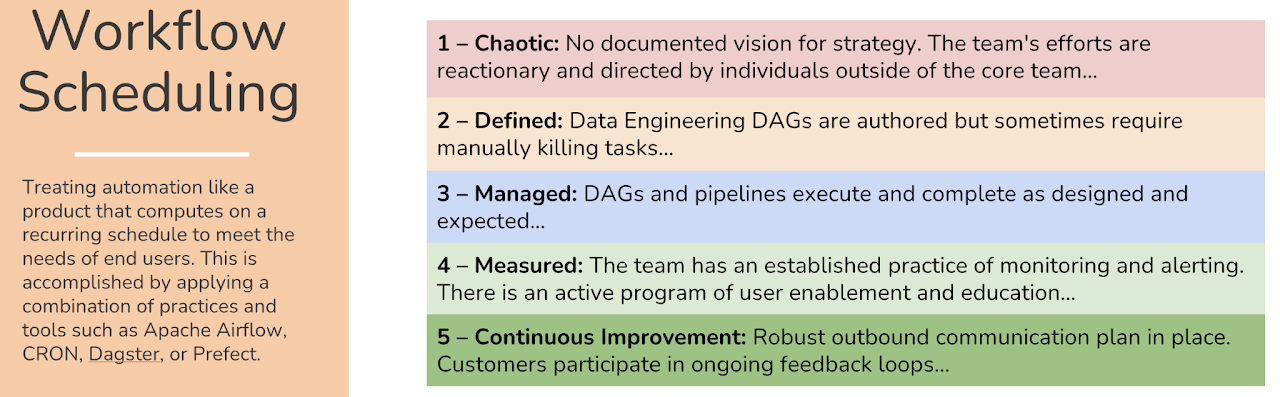

Here is a quick summary of the Workflow Scheduling health marker metric.

Workflow Scheduling means treating automation like a product that runs on a recurring schedule to meet the needs of end users. This is accomplished by combining practices with tools such as Apache Airflow, cron, Dagster, or Prefect.

Why does it matter?

To use this practice, we sit down in a room together and gather as many sticky notes as we can find. Each person takes three colors of sticky notes and a permanent marker. First, we each write a number from one through five, on the scale from Chaotic to Continuous Improvement, on a blue sticky note. Next, we each write one good thing the team does about Workflow Scheduling on a green sticky note, and one thing the team needs to improve on a note of the third color.

Everyone then tapes the notes to the whiteboard, and we compare as a team. Approaching metrics this way gives an unbiased perspective and helps identify our strengths and weaknesses as a group. This activity can reinforce past successes and identify gaps your team may need to be aware of.

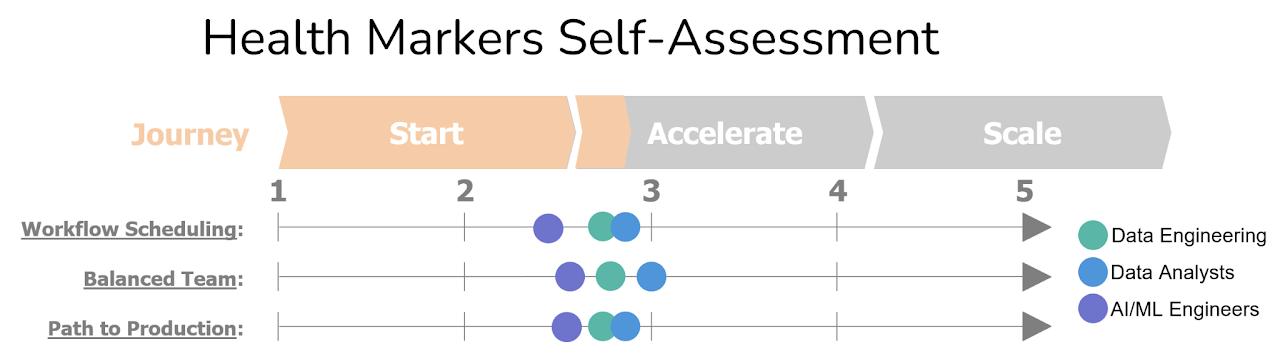

We use nine health markers to create a holistic picture and output for our clients. We separate the results by group to give a clearer view of the ratings and of how each group perceives the product. In this example, the Data Engineers rated themselves in between the Data Analysts and AI/ML Engineering teams.
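If you want to tally the blue-note ratings after a session, a few lines of Python are enough. The following is only a minimal sketch: the group names come from the example above, but the individual ratings and the `average_by_group` helper are invented for illustration and are not part of our actual tooling.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical sticky-note ratings (1 = Chaotic, 5 = Continuous Improvement).
# Group names follow the article; the numbers are invented for illustration.
ratings = [
    ("Data Analysts", 2),
    ("Data Analysts", 3),
    ("Data Engineers", 3),
    ("Data Engineers", 4),
    ("AI/ML Engineering", 4),
    ("AI/ML Engineering", 5),
]


def average_by_group(notes):
    """Return the mean rating per group, rejecting values outside 1-5."""
    by_group = defaultdict(list)
    for group, score in notes:
        if not 1 <= score <= 5:
            raise ValueError(f"rating out of range: {score}")
        by_group[group].append(score)
    return {group: mean(scores) for group, scores in by_group.items()}


averages = average_by_group(ratings)

# Print groups from lowest to highest average rating.
for group, avg in sorted(averages.items(), key=lambda item: item[1]):
    print(f"{group}: {avg:.1f}")
```

Keeping the ratings split by group, rather than averaging the whole room, is what makes differences in perception between teams visible.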

The hidden value gained from these sessions is a common understanding and direct feedback from users. Often, data engineering and other teams don't meet on a regular basis or share common ground. Having our team come in and break down these barriers as experts in multiple fields can be a catalyst for your future success.